
Bennett Wilburn


Professional Interests: My technical career includes microprocessor VLSI circuit design, architecting the world’s first 100-camera light field video camera array, and work in computational photography, computer graphics, and computer vision. My main research interest is systems for capturing the real world for graphics and computational photography applications. My recent experience has been in the commercialization of new technologies, and I aim to continue developing interesting technologies and creating innovative and compelling consumer products.

Recent History: After finishing my thesis and post-doctoral work in 2005, I spent half a year consulting with IPIX, working on technology to create a gigapixel video camera array for high-resolution real-time surveillance from unmanned aerial vehicles. This technology was eventually spun off into a startup called Argusight. After that I spent five years in the Visual Computing Group at Microsoft Research Asia in Beijing, China. I then jumped back into industry, working at Lytro on the world's first consumer light field camera. Recently I returned to Microsoft to work on cool new gadgets.


Dissertation: High Performance Imaging Using Arrays of Inexpensive Cameras.

Research Affiliations while at Stanford:

- Stanford Multiple Camera Array project, headed by Marc Levoy.

- Mark Horowitz's VLSI Research group.


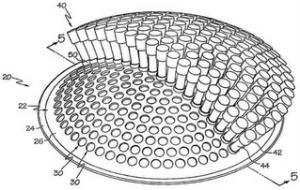

The Stanford Multiple Camera Array. My research focus at Stanford was high performance imaging using cheap image sensors. To explore the possibilities of inexpensive sensing, I designed the Stanford Multiple Camera Array, shown above. The system uses MPEG video compression and IEEE 1394 communication to capture minutes of video from over 100 CMOS image sensors using just four PCs. The array has been operational since February 2003, and since then we've developed our geometric and radiometric calibration pipelines and explored several applications.

Selected Publications:


Photometric Stereo for Dynamic Surface Orientations. H.W. Kim, B. Wilburn, M. Ben-Ezra. Proc. European Conference on Computer Vision (ECCV), 2010. This work presents a photometric stereo (shape from active illumination) system and method for computing the time-varying shape of non-rigid objects of unknown and spatially varying materials. The prior art uses time-multiplexed illumination but assumes constant surface normals across several frames, fundamentally limiting the accuracy of the estimated normals. We explicitly account for time-varying surface orientations and show that for unknown Lambertian materials, five images are sufficient to recover surface orientation in one frame. Our optimized system exploits the physical properties of typical cameras and LEDs to use just three images, and also facilitates frame-to-frame image alignment using standard optical flow methods, despite time-varying illumination. This video shows some examples of the fine shape detail we can capture with this system.
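For readers new to photometric stereo, the classic static Lambertian case is a useful reference point: with three or more known light directions, each pixel's intensities satisfy I = albedo * (L · n), so a least-squares solve recovers the scaled normal. The sketch below shows only that textbook baseline (it assumes a static surface, distant lights with known unit directions, and no shadows); it is not the time-varying method of the paper, and the function name is hypothetical.

```python
import numpy as np

def lambertian_photometric_stereo(intensities, light_dirs):
    """Classic static Lambertian photometric stereo (textbook baseline).

    intensities: array (K, P) of pixel intensities, K lights by P pixels
    light_dirs:  array (K, 3) of unit light directions, assumed known
    Returns (normals (3, P), albedo (P,)).
    """
    L = np.asarray(light_dirs, dtype=float)
    I = np.asarray(intensities, dtype=float)
    # Solve L @ G = I in the least-squares sense; each column of G is albedo * normal.
    G, *_ = np.linalg.lstsq(L, I, rcond=None)
    albedo = np.linalg.norm(G, axis=0)
    normals = G / np.maximum(albedo, 1e-12)  # guard against division by zero
    return normals, albedo

# Toy example: one pixel with albedo 0.7 lit from three assumed directions.
lights = np.array([[0.0, 0.0, 1.0], [0.6, 0.0, 0.8], [0.0, 0.6, 0.8]])
n_true = np.array([0.1, 0.2, 1.0]) / np.linalg.norm([0.1, 0.2, 1.0])
observed = 0.7 * lights @ n_true
normals, albedo = lambertian_photometric_stereo(observed[:, None], lights)
print(normals[:, 0], albedo[0])
```

With exactly three non-coplanar lights the system is square and the solve is exact; extra lights simply overdetermine it.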


Radiometric Calibration Using Temporal Irradiance Mixtures. B. Wilburn, H. Xu, Y. Matsushita. Proc. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2008. We propose a new method for sampling camera response functions: temporally mixing two uncalibrated irradiances within a single exposure. Calibration methods rely on some known relationship between irradiance at the camera image plane and measured pixel intensities. Prior approaches use a color checker chart with known reflectances, registered images with different exposure ratios, or even the irradiance distribution along edges in the images. We show that temporally blending irradiances allows us to densely sample the camera response function with known relative irradiances. Our first method computes the camera response curve using temporal mixtures of two pixel intensities on an uncalibrated computer display. The second approach makes use of temporal irradiance mixtures caused by motion blur. Both methods require only one input image, although more images can be used for improved robustness to noise or to cover more of the response curve. We show that our methods compute accurate response functions for a variety of cameras.
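The key relation behind temporal mixing is that a pixel which sees irradiance E1 for a fraction t of the exposure and E2 for the rest integrates t*E1 + (1 - t)*E2, which is linear in the known fraction t. The sketch below illustrates this under simplifying assumptions that are not from the paper: the dark level contributes zero irradiance (so relative irradiance equals t), the response is monotonic, and a hypothetical gamma curve stands in for the unknown camera. Pairing each measured intensity with its mixing fraction then samples the inverse response directly.

```python
import numpy as np

def camera_response(irradiance, gamma=2.2):
    """Hypothetical nonlinear camera response, normalized to [0, 1]."""
    return np.clip(irradiance, 0.0, 1.0) ** (1.0 / gamma)

def mixed_exposure(e_bright, e_dark, fraction):
    """Time-averaged irradiance: e_bright for `fraction` of the exposure, e_dark otherwise."""
    return fraction * e_bright + (1.0 - fraction) * e_dark

def sample_inverse_response(fractions, intensities):
    """Return a function mapping measured intensity -> relative irradiance.

    Assumes e_dark = 0, so relative irradiance equals the mixing fraction,
    and a monotonically increasing response (required by np.interp)."""
    order = np.argsort(intensities)
    xs = np.asarray(intensities)[order]
    ys = np.asarray(fractions)[order]
    return lambda intensity: np.interp(intensity, xs, ys)

# Densely sample the response with 21 known mixing fractions.
fractions = np.linspace(0.0, 1.0, 21)
intensities = camera_response(mixed_exposure(1.0, 0.0, fractions))
inverse = sample_inverse_response(fractions, intensities)
print(inverse(camera_response(0.3)))  # should be close to 0.3
```

If the dark level is not zero, the relative irradiance is still affine in t, so the same samples determine the inverse response up to an affine ambiguity rather than exactly.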


Stereo Reconstruction with Mixed Pixels Using Adaptive Over-Segmentation. Y. Taguchi, B. Wilburn, C.L. Zitnick. Proc. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2008. We present an over-segmentation-based dense stereo estimation algorithm that jointly estimates segmentation and depth. For mixed pixels on segment boundaries, the algorithm computes the foreground opacity (alpha), as well as color and depth for the foreground and background. We model the scene as a collection of fronto-parallel planar segments and use a generative model for image formation that handles mixed pixels at segment boundaries. Our method iteratively updates the segmentation based on color, depth, and shape constraints using MAP estimation. Given a segmentation, the depth estimates are updated using belief propagation. We show that our method is competitive with the state of the art on the Middlebury stereo evaluation, and that it overcomes limitations of traditional segmentation-based methods while properly handling mixed pixels. Z-keying results show the advantages of combining opacity and depth estimation.
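A mixed pixel is commonly modeled with the standard compositing equation I = alpha * F + (1 - alpha) * B. If the foreground and background colors F and B are known (for example, from neighboring segments), alpha has a closed-form least-squares estimate: project I - B onto F - B. The sketch below shows only that per-pixel step under the assumption of known F and B; the paper's full method jointly estimates segmentation, depth, and colors with MAP estimation and belief propagation, which this does not attempt. The function name is hypothetical.

```python
import numpy as np

def estimate_alpha(observed, foreground, background):
    """Least-squares alpha for I = alpha * F + (1 - alpha) * B with known F and B.

    Projects (I - B) onto (F - B) and clips the result to [0, 1].
    Raises ValueError when F and B are (nearly) identical, since alpha is
    then unobservable from color alone."""
    I = np.asarray(observed, dtype=float)
    F = np.asarray(foreground, dtype=float)
    B = np.asarray(background, dtype=float)
    diff = F - B
    denom = float(np.dot(diff, diff))
    if denom < 1e-12:
        raise ValueError("foreground and background colors are too similar")
    alpha = float(np.dot(I - B, diff)) / denom
    return float(np.clip(alpha, 0.0, 1.0))

# A pixel that is 25% red foreground over a blue background.
print(estimate_alpha([0.25, 0.0, 0.75], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]))
```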


Surface Enhancement Using Real-Time Photometric Stereo and Reflectance Transformation. T. Malzbender, B. Wilburn, D. Gelb, and B. Ambrisco. Proc. Eurographics Symposium on Rendering, 2006. Photometric stereo recovers per-pixel estimates of surface orientation from images of a surface under varying lighting conditions. Transforming reflectance based on recovered normal directions is useful for enhancing the appearance of subtle surface detail. We present the first system that achieves real-time photometric stereo and reflectance transformation. We also introduce new GPU-accelerated normal transformations that amplify shape detail. Our system allows users in fields such as forensics, archaeology, and dermatology to investigate objects and surfaces by simply holding them in front of the camera. See this video for a summary of the work and a demonstration of the system.


Synthetic Aperture Focusing Using a Shear-Warp Factorization of the Viewing Transform. Vaibhav Vaish, Gaurav Garg, Eino-Ville Talvala, Emilio Antunez, Bennett Wilburn, Mark Horowitz, and Marc Levoy. Proc. Workshop on Advanced 3D Imaging for Safety and Security (in conjunction with CVPR 2005), 2005. Oral presentation. This paper analyzes the warps required for synthetic aperture photography using tilted focal planes and arbitrary camera configurations. We characterize the warps using a new rank-1 constraint that lets us focus on any plane without having to perform metric calibration of the cameras. We demonstrate the advantages of this method with a real-time implementation using 30 cameras from the Stanford Multiple Camera Array. This video shows our results.


High Performance Imaging Using Large Camera Arrays. Bennett Wilburn, Neel Joshi, Vaibhav Vaish, Eino-Ville Talvala, Emilio Antunez, Adam Barth, Andrew Adams, Mark Horowitz and Marc Levoy. Presented at ACM SIGGRAPH 2005. This paper describes the final 100-camera system. We also present applications enabled by the array's flexible mounting system, precise timing control, and processing power at each camera. These include high-resolution, high-dynamic-range video capture; spatiotemporal view interpolation (think Matrix-style bullet time, but with virtual camera trajectories chosen after filming); live, real-time synthetic aperture videography; non-linear synthetic aperture photography; and hybrid-aperture photography (read the paper for details!). Here's a video (70 MB QuickTime) showing the system and most of these applications.


High Speed Video Using A Dense Camera Array. Bennett Wilburn, Neel Joshi, Vaibhav Vaish, Marc Levoy and Mark Horowitz. Presented at CVPR 2004. We create a 1560 fps video camera using 52 cameras from our array. Because we compress data in parallel at each camera, our system can stream indefinitely at this frame rate, so there is no need to trigger capture around the event of interest. Here's the video of the popping balloon shown at left. The web page for the paper has several more videos.
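The frame-rate arithmetic follows directly from the numbers above: 1560 fps from 52 cameras means each camera runs at 1560 / 52 = 30 fps, with the cameras' trigger times staggered evenly across one 30 fps frame period. The sketch below computes those offsets and interleaves per-camera frames into a single high-speed sequence. It assumes ideal, evenly spaced triggers and ignores the alignment of views from different cameras that a real system also needs; all function names are hypothetical.

```python
def effective_frame_rate(num_cameras, camera_fps):
    """Aggregate frame rate when triggers are evenly staggered."""
    return num_cameras * camera_fps

def staggered_trigger_offsets(num_cameras, camera_fps):
    """Trigger delay (seconds) for each camera within one frame period."""
    frame_period = 1.0 / camera_fps
    return [k * frame_period / num_cameras for k in range(num_cameras)]

def interleave_frames(frames_per_camera):
    """Merge per-camera frame lists into one time-ordered sequence.

    frames_per_camera[k][j] is frame j from camera k, captured at time
    j * frame_period + k * frame_period / num_cameras, so sorting by
    (j, k) gives temporal order."""
    num_frames = min(len(frames) for frames in frames_per_camera)
    num_cameras = len(frames_per_camera)
    return [frames_per_camera[k][j] for j in range(num_frames) for k in range(num_cameras)]

offsets = staggered_trigger_offsets(52, 30)
print(effective_frame_rate(52, 30), offsets[1])
print(interleave_frames([["a0", "a1"], ["b0", "b1"], ["c0", "c1"]]))
```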


Using Plane + Parallax for Calibrating Dense Camera Arrays. Vaibhav Vaish, Bennett Wilburn and Marc Levoy. Presented at CVPR 2004. We present a method for plane + parallax calibration of planar camera arrays. This type of calibration is useful for light field rendering and synthetic aperture photography and is simpler and more robust than full geometric calibration. Synthetic aperture photography uses many cameras to simulate a single large-aperture camera with a very shallow depth of field. By digitally focusing beyond partially occluding foreground objects like foliage, we can blur them away to reveal objects in the background. Here's an example QuickTime video.
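Synthetic aperture focusing can be sketched as shift-and-add. The sketch assumes the images have already been warped to a common reference plane and that, for a planar array, the residual parallax of a point is its camera's offset in the array plane times a single relative-depth parameter mu. Shifting each image to cancel the parallax expected for one value of mu and then averaging keeps points at that depth sharp, while points at other depths, such as occluding foliage, spread out and fade. Integer shifts with `np.roll` (which wraps at the borders) keep it short; a real implementation would use sub-pixel warps. The function name and toy scene are hypothetical.

```python
import numpy as np

def synthetic_aperture_focus(images, offsets, mu):
    """Shift-and-add synthetic aperture focusing.

    images  : array (N, H, W), already warped to a common reference plane
    offsets : N camera positions (dx, dy) relative to a reference camera,
              in units where parallax = mu * offset (an assumption)
    mu      : relative-depth parameter selecting the synthetic focal plane
    """
    acc = np.zeros(images[0].shape, dtype=float)
    for img, (dx, dy) in zip(images, offsets):
        # Cancel the parallax expected for points on the chosen plane.
        shift_x = int(round(-mu * dx))
        shift_y = int(round(-mu * dy))
        acc += np.roll(img, (shift_y, shift_x), axis=(0, 1))
    return acc / len(images)

# Toy scene: a 5x5 camera grid viewing one bright point whose parallax
# is 2 pixels per unit of camera offset.
offsets = [(dx, dy) for dy in range(-2, 3) for dx in range(-2, 3)]
true_mu = 2
images = np.zeros((len(offsets), 32, 32))
for k, (dx, dy) in enumerate(offsets):
    images[k, 16 + true_mu * dy, 16 + true_mu * dx] = 1.0

in_focus = synthetic_aperture_focus(images, offsets, mu=true_mu)
out_of_focus = synthetic_aperture_focus(images, offsets, mu=0)
print(in_focus[16, 16], out_of_focus.max())
```

Focused at the correct depth, all 25 copies of the point land on the same pixel; focused elsewhere, each contributes only 1/25 of its energy to 25 different pixels, which is the blurring that lets synthetic apertures see past occluders.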

Older Publications: